I decided to take a walk on the wild side and examine R as a GIS for spatial analysis. I hope to use several of R's spatial statistics packages and to automate tasks while staying within one program. I highly recommend Brunsdon and Comber's book ($50 on Amazon in paperback; electronic versions are also available).

About the Authors: Chris Brunsdon is one of the creators of geographically weighted regression (GWR). Lex Comber is a professor at Leeds University.

Four Reasons to choose R as a GIS

1) You are interested in performing tailored exploratory spatial data analysis (ESDA), spatial statistics, regression analysis, and diagnostics.

- Of course, R is also far better than ArcGIS and QGIS for summary statistics. (Notably, QGIS has integrated an R processing toolbox, and ArcGIS has an official bridge to R.)

2) You already use R for non-spatial data, have lots of code written, and need to analyze spatial data.

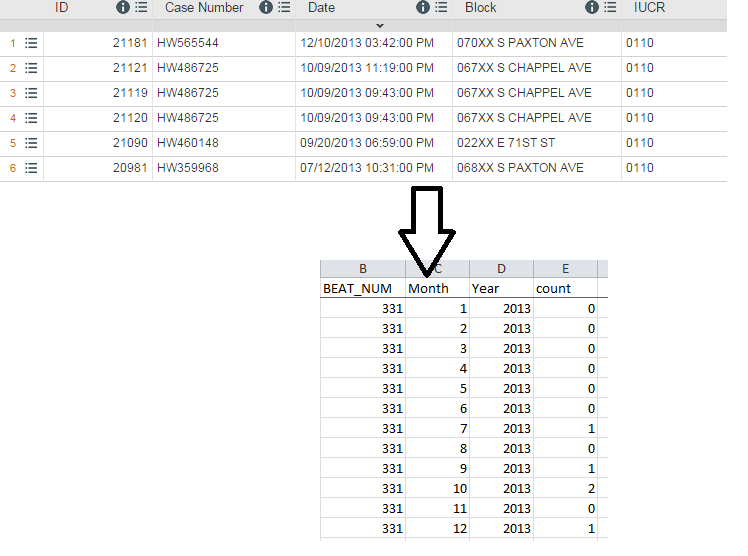

3) You do not want to export your data (or results) from one program into another and back again!

4) You want to be able to publish or share your code with a wider audience.

|

| A great cover to a great book! |

Reader Accessibility

The content is extremely well presented, clear, and concise, and it includes color graphics. It is not overly technical. Still, R as a GIS and spatial analysis are tough material and definitely not for the faint of heart. The authors assume readers may lack an R background, a GIS background, or both. I took an R class in graduate school and use R occasionally.

Additional packages that assist in manipulating and reshaping data, such as plyr, are also discussed. The authors also warn readers that R packages can change over time, which may cause error messages, although many packages warn users about recent and upcoming changes.

Overview

In the first 40 pages, you will learn R basics, if you don't already have a foundation. Next, you will learn GIS fundamentals: how to plot data to create a map, taking scale into account, and how to add and position common map elements like a north arrow and scale bar. This may sound basic, but in R nothing is easy! Of course, the advantage of code is that you can reuse it, or modify it only slightly, for many maps.
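To give a flavor of what "plotting a map with a scale bar and north arrow" involves, here is a minimal sketch in base R graphics. It is not taken from the book: the point data are made up, the coordinates are assumed to be in metres in a projected system, and `draw_map` and `bar_length` are hypothetical names chosen for illustration.

```r
# Hypothetical sample sites in projected coordinates (metres); not from the book.
set.seed(42)
pts <- data.frame(
  x = runif(50, min = 0, max = 10000),
  y = runif(50, min = 0, max = 8000)
)

# draw_map is a hypothetical helper: plots the points, then adds a scale bar
# and a north arrow by hand with base graphics primitives.
draw_map <- function(pts, bar_length = 2000) {
  # asp = 1 keeps one map unit the same length in x and y,
  # which is what makes a single scale bar meaningful
  plot(pts$x, pts$y, asp = 1, pch = 19, col = "steelblue",
       axes = FALSE, xlab = "", ylab = "", main = "Sample sites")
  box()

  # Scale bar: a segment of known ground length near the lower-left corner
  x0 <- min(pts$x)
  y0 <- min(pts$y)
  segments(x0, y0, x0 + bar_length, y0, lwd = 3)
  text(x0 + bar_length / 2, y0,
       labels = paste(bar_length / 1000, "km"), pos = 3)

  # North arrow: a vertical arrow near the upper-right corner
  xn <- max(pts$x)
  yn <- max(pts$y)
  arrows(xn, yn - 1000, xn, yn, length = 0.1, lwd = 2)
  text(xn, yn - 1000, labels = "N", pos = 1)

  # Return the scale bar endpoints so the caller can check or reuse them
  invisible(c(bar_start = x0, bar_end = x0 + bar_length))
}

bar <- draw_map(pts)
```

Because everything lives in one function, producing a second map is a matter of calling `draw_map()` again with different data or a different `bar_length`, which is the reuse advantage mentioned above.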

Late in Chapters 5-6, the book dives into spatial analysis. The last few chapters are probably the best of the book, covering more advanced statistical techniques, including local indicators of spatial association (LISAs) and geographically weighted summary statistics and regression.
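For readers who have not met a LISA before, the following is a rough sketch of one common example, the local Moran's I, computed by hand in base R. It is my own illustration under stated assumptions, not the book's code: the 5 x 5 grid and its values are invented, neighbours are defined by rook contiguity, the weights are row-standardized, and `local_moran` is a hypothetical helper name.

```r
# Hypothetical 5 x 5 grid of cells with a west-to-east trend plus noise
set.seed(1)
grid <- expand.grid(col = 1:5, row = 1:5)
grid$value <- grid$col + rnorm(nrow(grid), sd = 0.5)

# Rook contiguity: cells whose centres are one unit apart are neighbours
d <- as.matrix(dist(grid[, c("col", "row")]))
w <- (abs(d - 1) < 1e-9) * 1
w <- w / rowSums(w)  # row-standardize so each row of weights sums to 1

# Local Moran's I for each cell:
#   I_i = (z_i / m2) * sum_j w_ij * z_j
# where z is the mean-centred variable and m2 = sum(z^2) / n
local_moran <- function(x, w) {
  z <- x - mean(x)
  m2 <- sum(z^2) / length(x)
  lag_z <- as.vector(w %*% z)  # weighted average of neighbouring deviations
  (z / m2) * lag_z
}

grid$Ii <- local_moran(grid$value, w)

# With row-standardized weights, the mean of the local values
# equals the global Moran's I, which is a handy sanity check
z <- grid$value - mean(grid$value)
global_I <- sum(w * outer(z, z)) / sum(z^2)
```

Positive values of `Ii` flag cells that resemble their neighbours (high next to high, or low next to low), while negative values flag local outliers. This sketch omits the significance testing that a real analysis would need.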

The book provides a great guide and reference, and I am sure I will be revisiting it frequently! Overall, it is a great mix of practice and theory.

Disclosures:

None. I found and purchased the book on my own.